*Disclaimer:* This post is based on my own findings, so you may see different numbers, but to my knowledge the general guidelines are sound.

NUMA, or Non-Uniform Memory Access, has been part of Intel processors since the Nehalem generation, which was introduced in late 2008.

NUMA and vNUMA

Since vSphere 5.0, VMware has been able to expose the NUMA topology of the underlying processors to the guest OS (vNUMA). It is enabled automatically when you create a VM with more than 8 vCPUs; the only requirement is that the VM is at hardware version 8 or later. You can manually edit the advanced settings so that the NUMA topology is exposed even with a lower number of vCPUs. However, it is strongly recommended that you clearly understand how that affects your VM before you do so.

CPU hot-add and vNUMA

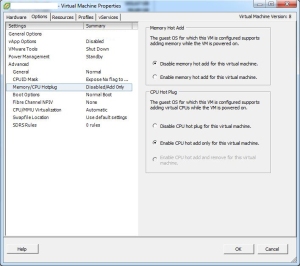

One thing to be aware of when deploying machines that cross NUMA boundaries: if you enable CPU hot-add on the guest, VMware hides the NUMA information from the guest OS. The guest OS can then no longer place applications and their memory on the same NUMA node. For performance-intensive systems I would therefore recommend turning off the CPU hot-add feature. Memory hot-add still works and does not have this effect.

Images show Coreinfo run on a 4 CPU machine with 4 cores before and after CPU hot-add was enabled.
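The rules from the two sections above can be written down as a small check. This is only a sketch of the rule of thumb described in this post (more than 8 vCPUs, hardware version 8 or later, CPU hot-add off); the function name and parameters are made up for illustration and are not a VMware API, and it ignores any manual override in the advanced settings.

```python
def vnuma_exposed_by_default(vcpus: int, hardware_version: int, cpu_hot_add: bool) -> bool:
    """Rule of thumb from this post, NOT an official VMware API.

    Returns True if the guest would be expected to see the NUMA topology
    without any manual changes to the advanced settings.
    """
    if hardware_version < 8:
        # vNUMA requires hardware version 8 or later.
        return False
    if cpu_hot_add:
        # CPU hot-add hides the NUMA information from the guest.
        return False
    # Exposed automatically only for VMs with more than 8 vCPUs.
    return vcpus > 8


print(vnuma_exposed_by_default(12, 8, cpu_hot_add=False))
print(vnuma_exposed_by_default(12, 8, cpu_hot_add=True))
print(vnuma_exposed_by_default(4, 8, cpu_hot_add=False))
```

The second call is the case this section warns about: a VM that is large enough for vNUMA but loses it because CPU hot-add is switched on.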

Crossing a NUMA boundary

NUMA is a memory thing, but you can cross a NUMA boundary in more than one way. If you have a 4-way machine with 8-core CPUs and 128 GB of memory, each NUMA node has 8 cores and 32 GB of RAM (128 GB divided across 4 sockets). That puts the NUMA boundary at 8 vCPUs and 32 GB of RAM: if you create a VM with more than 8 vCPUs OR more than 32 GB of RAM, that VM might have to access memory attached to another CPU.
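The arithmetic above can be sketched as a small helper. It assumes one NUMA node per physical socket and memory spread evenly across the sockets, which is not true for every server; the class and function names are made up for this example.

```python
from dataclasses import dataclass


@dataclass
class Host:
    sockets: int            # physical CPUs ("4-way" means 4)
    cores_per_socket: int   # physical cores per CPU
    memory_gb: float        # total RAM in the host

    @property
    def node_cores(self) -> int:
        # Assumption: one NUMA node per socket.
        return self.cores_per_socket

    @property
    def node_memory_gb(self) -> float:
        # Assumption: memory is distributed evenly across the sockets.
        return self.memory_gb / self.sockets


def crosses_numa_boundary(host: Host, vm_vcpus: int, vm_memory_gb: float) -> bool:
    """True if the VM needs more cores OR more memory than one node offers."""
    return vm_vcpus > host.node_cores or vm_memory_gb > host.node_memory_gb


host = Host(sockets=4, cores_per_socket=8, memory_gb=128)
print(host.node_cores, host.node_memory_gb)      # boundary: 8 cores, 32.0 GB
print(crosses_numa_boundary(host, 8, 32))        # fits inside one node
print(crosses_numa_boundary(host, 4, 48))        # few vCPUs, but too much RAM
```

The last call shows the easy-to-miss case: a VM with only 4 vCPUs still crosses the boundary because of its memory size.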

If you cross a NUMA boundary there is a penalty, which can be shown in part by Coreinfo.

This example is a 4-way guest with 8 cores on each socket, for a total of 32 cores, running on a 2×10-core system, so 12 of the vCPUs run on hyper-threads. It shows the penalty you pay for accessing memory that is located on another node, and it has determined that accessing local memory is the fastest. I have, however, seen some systems where Node 0 to Node 0 was slower than Node 1 to Node 1.
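To make the idea of a cost "relative to the fastest" concrete, here is a minimal sketch that normalizes a node-to-node latency matrix against its fastest entry. The latency numbers are invented purely for illustration and are not measurements from any system; the function name is also made up.

```python
def relative_costs(latency_ns: list[list[float]]) -> list[list[float]]:
    """Express every node-to-node latency as a multiple of the fastest one."""
    fastest = min(min(row) for row in latency_ns)
    return [[round(value / fastest, 1) for value in row] for row in latency_ns]


# Hypothetical latencies in nanoseconds (rows: from node, columns: to node).
# Made-up values, chosen so that Node 0 -> Node 0 is slower than Node 1 -> Node 1.
measured = [
    [88.0, 120.0],
    [120.0, 80.0],
]

for row in relative_costs(measured):
    print(row)
```

With these made-up numbers the remote accesses come out at 1.5 times the fastest local access, and Node 0's local access is itself slightly slower than Node 1's, which is the kind of asymmetry mentioned above.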

In normal environments this might not really be a problem, as access to storage or networking is far slower than accessing memory on another CPU. In high-performance clusters, however, it is something you should consider when building your physical infrastructure. I've spoken to one customer who had seen up to a 35% degradation in overall performance from crossing a NUMA boundary, and because of that they only recommend VMs that fit within one physical CPU and its memory.

Understand your hardware configuration

One thing you really need to pay attention to is your hardware configuration. Choosing socket and core settings in VMware that do not match your physical hardware can decrease performance a lot. Mark Achtemichuk has a nice blog post showing how much performance can vary depending on the values you select in vSphere for "Number of virtual sockets" and "Number of cores per socket".

On top of that, your hardware vendor might not be aware that the configuration they're selling you crosses NUMA boundaries. For example, I've bought 2-CPU blades from Dell that were built on 4-way motherboards so I could add more CPUs later. To keep the cost down, Dell used 4 GB memory modules and distributed them evenly across all the banks on the motherboard, meaning that half my memory can now only be accessed with a penalty. If memory performance matters in your environment, the extra cost of 8 GB memory modules might be worth it.

Microsoft and NUMA support

Windows Server has been NUMA-aware since the Enterprise and Datacenter editions of Windows Server 2003. This means the OS can schedule its threads so that processes accessing the same areas of memory are placed on the same NUMA node, which helps reduce the penalty of crossing a NUMA boundary. Microsoft SQL Server has had NUMA support since 2005, but it seems it wasn't fully supported until 2008 R2. For SQL Server it means that each database engine is started on its own NUMA node. Even if there is only one database engine, it will attempt to start that engine on the second node. This is because the OS allocates its own memory on the first node, so Node 0 has less memory available to other applications than the other nodes.

For SharePoint farms, Microsoft's best practice actually advises you NOT to cross NUMA boundaries, but to adopt a scale-out approach instead. Of course that means selling more SharePoint licenses, so make sure you test how your performance is affected when crossing a NUMA boundary on a SharePoint farm.

Links

- https://pubs.vmware.com/vsphere-50/index.jsp?topic=%2Fcom.vmware.vsphere.resmgmt.doc_50%2FGUID-17B629DE-75DF-4C23-B831-08107007FBB9.html

- http://www.benjaminathawes.com/2011/11/09/determining-numa-node-boundaries-for-modern-cpus/

- http://technet.microsoft.com/en-us/library/ff621103(v=office.15).aspx

- http://blogs.vmware.com/vsphere/2013/10/does-corespersocket-affect-performance.html
